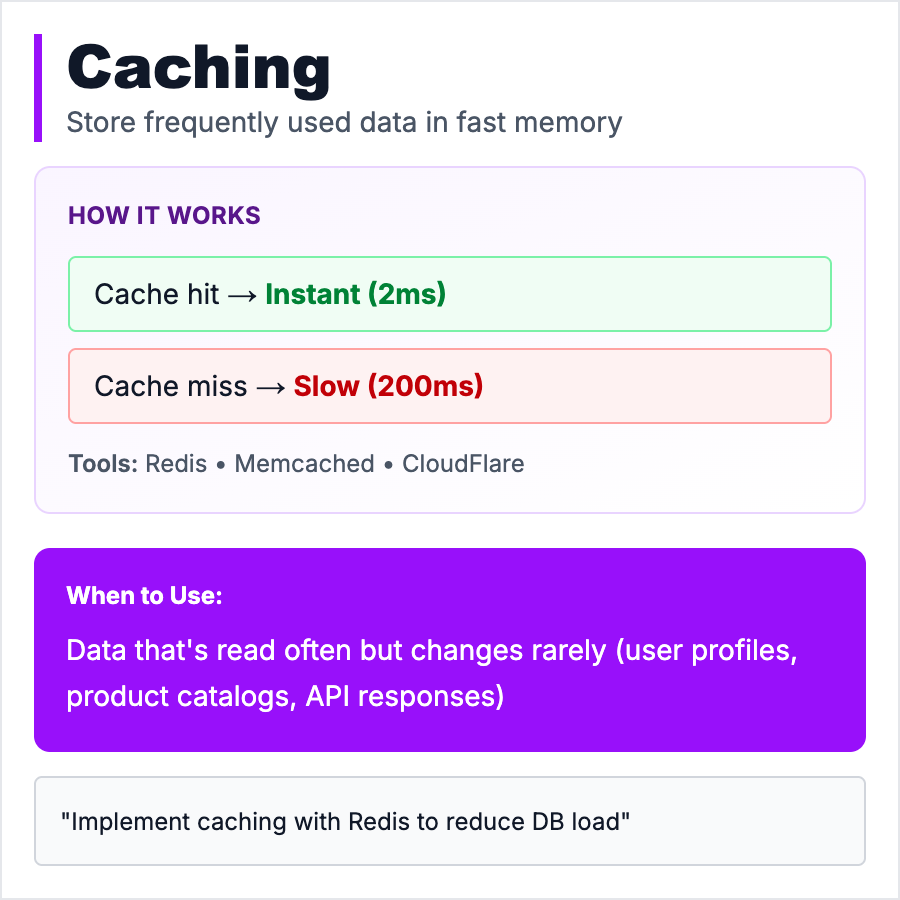

What is Caching?

Caching stores frequently accessed data in fast storage (usually memory) instead of slow storage (a database or disk). It's like keeping common items on your desk instead of in the filing cabinet. Suppose fetching a user profile from the database takes 50ms. With caching, the first request still takes 50ms, but the next 1,000 requests for the same profile can each take under 1ms. That's a huge performance win. Common tools: Redis, Memcached, and CDN caching. The hard part is cache invalidation: keeping cached data fresh when the underlying data changes.

When Should You Use This?

Cache when you have expensive operations (database queries, API calls, computations) that are called frequently with the same inputs. Great for: user sessions, product catalogs, homepage data, API responses. Don't cache: real-time data (stock prices), user-specific data that changes often, or any data where staleness is unacceptable. Start caching when pages still load slowly after you've optimized the database.
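For the "same inputs, called frequently" case, Python's standard library already provides memoization through `functools.lru_cache`. The sketch below assumes a hypothetical `shipping_quote` function standing in for an expensive computation; the pricing formula is made up for illustration.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)  # keep up to 1024 distinct argument combinations
def shipping_quote(weight_kg, zone):
    """Hypothetical expensive computation; imagine a slow pricing call here."""
    return round(4.0 + 1.25 * weight_kg + 2.0 * zone, 2)


shipping_quote(2.0, 3)  # miss: computed and stored
shipping_quote(2.0, 3)  # hit: same inputs, served from the cache
shipping_quote(5.0, 1)  # miss: different inputs

info = shipping_quote.cache_info()
print(f"hits={info.hits} misses={info.misses}")
```

Note that `lru_cache` evicts by size, not by age, so it suits pure computations whose result never changes for a given input. Data that can go stale needs an expiration or explicit invalidation instead.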

Common Mistakes to Avoid

- Caching everything: cache expensive operations, not cheap ones

- No expiration: data stays stale forever and users see old info

- Cache stampede: a popular entry expires and 1,000 requests hit the database simultaneously

- Wrong cache level: caching HTML when you should cache database queries

- Not monitoring hit rate: if the cache hit rate is 20%, you're caching the wrong things
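Two of the mistakes above, the stampede and the unmonitored hit rate, can be addressed together. The following is one possible sketch, assuming a single-process, multi-threaded server: a per-key lock lets only one caller rebuild an expired entry while the others wait, and simple counters expose the hit rate. `StampedeSafeCache` and `get_or_load` are illustrative names, not part of any library.

```python
import threading
import time


class StampedeSafeCache:
    """In-memory TTL cache where only one thread per key runs the loader."""

    def __init__(self, ttl_seconds):
        self.ttl_seconds = ttl_seconds
        self._entries = {}               # key -> (expires_at, value)
        self._key_locks = {}             # key -> lock guarding recomputation
        self._guard = threading.Lock()   # protects the dicts and counters
        self.hits = 0
        self.misses = 0

    def _fresh(self, key):
        """Return (True, value) for an unexpired entry. Caller holds _guard."""
        entry = self._entries.get(key)
        if entry is not None and time.monotonic() < entry[0]:
            return True, entry[1]
        return False, None

    def get_or_load(self, key, loader):
        with self._guard:
            found, value = self._fresh(key)
            if found:
                self.hits += 1
                return value
            self.misses += 1
            key_lock = self._key_locks.setdefault(key, threading.Lock())

        with key_lock:
            # Another thread may have refilled the entry while we waited.
            with self._guard:
                found, value = self._fresh(key)
            if found:
                return value
            value = loader()  # slow call runs outside _guard, once per expiry
            with self._guard:
                expires_at = time.monotonic() + self.ttl_seconds
                self._entries[key] = (expires_at, value)
            return value

    def hit_rate(self):
        with self._guard:
            total = self.hits + self.misses
            return self.hits / total if total else 0.0
```

A caller would use it as `cache.get_or_load("homepage", load_homepage_from_db)`, where `load_homepage_from_db` is whatever slow function produces the value. Across multiple servers the same idea needs a shared lock (for example in the cache store itself), which this sketch does not cover.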

Real-World Examples

- Reddit: Caches homepage posts, refreshes every few seconds, serves millions without hitting DB

- Twitter: Timelines cached in Redis, handles billions of requests

- Shopify: Product catalog cached at CDN edge, instant page loads globally

- News sites: Articles cached for hours, breaking news has short cache times

Category

System Design Patterns