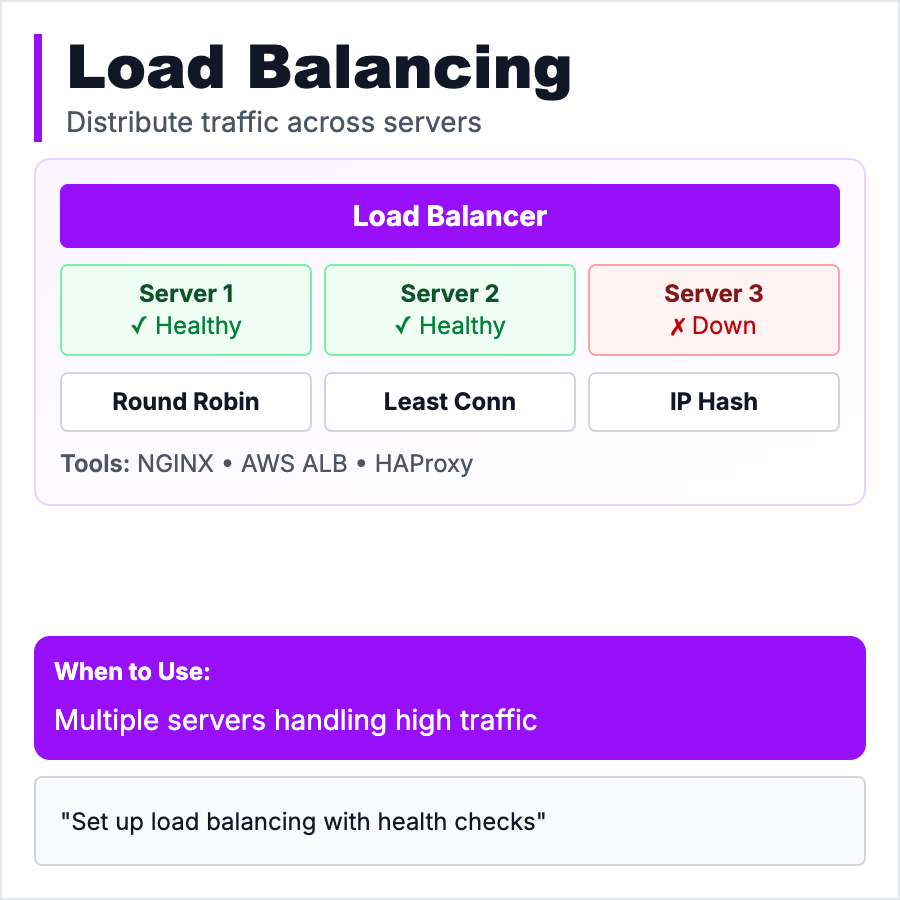

What is Load Balancing?

Load balancing distributes incoming requests across multiple servers so that no single server gets overwhelmed. Think of it as a traffic cop directing cars into different lanes. Users don't notice which server handles their request; they just get fast responses. It is essential for high-traffic apps, redundancy, and zero-downtime deploys. Common strategies are Round Robin (send requests to each server in turn), Least Connections (send to the server with the fewest active connections), and IP Hash (hash the client's IP address so the same client always reaches the same server).
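A minimal in-process sketch of the three strategies helps make the differences concrete. The class names, the server names `app-1` to `app-3`, and the SHA-256-modulo hash are illustrative assumptions; real load balancers implement these strategies internally, each with its own details.

```python
import hashlib
import itertools
from typing import Dict, List


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each request to the server with the fewest in-flight requests."""

    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def pick(self) -> str:
        # min() returns the first server with the lowest count, so ties go to list order
        server = min(self.active, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when a request finishes so the counts reflect live load
        self.active[server] -= 1


class IPHash:
    """Map each client IP to a fixed server."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = servers

    def pick(self, client_ip: str) -> str:
        # sha256 instead of built-in hash(), which is salted per process for strings
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]


servers = ["app-1", "app-2", "app-3"]

rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

lc = LeastConnections(servers)
first, second = lc.pick(), lc.pick()  # app-1, then app-2
lc.release(first)                     # app-1's request finished
print(lc.pick())                      # app-1 (tied with app-3 at 0, wins on list order)

ih = IPHash(servers)
print(ih.pick("203.0.113.7") == ih.pick("203.0.113.7"))  # True
```

One trade-off shows up in `IPHash`: because it takes the hash modulo the number of servers, adding or removing a server remaps most clients to a different server. Consistent hashing is the usual remedy when that matters.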

When Should You Use This?

Use load balancing when one server can't handle all your traffic, when you need redundancy (if one server dies, the others keep working), or when you want zero-downtime deploys (update servers one at a time, taking each out of rotation while it updates). Start simple with platforms like Vercel or Netlify, which handle it automatically. Add an explicit load balancer (AWS ALB, nginx) once you run multiple backend servers of your own.
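The one-server-at-a-time deploy can be sketched as a small simulation. Everything here is hypothetical: `Backend`, `rolling_deploy`, and the `healthy` callback stand in for whatever your load balancer API and deploy tooling actually provide, and the sketch does not model draining in-flight requests.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Backend:
    name: str
    version: str
    in_rotation: bool = True  # True means the load balancer may route requests here


def serving(pool: List[Backend]) -> List[str]:
    """Names of backends the load balancer is currently routing to."""
    return [b.name for b in pool if b.in_rotation]


def rolling_deploy(
    pool: List[Backend],
    new_version: str,
    healthy: Callable[[Backend], bool],
) -> List[int]:
    """Upgrade one backend at a time; return how many were serving at each step."""
    capacity_during_deploy = []
    for backend in pool:
        backend.in_rotation = False  # take it out of rotation (real LBs also drain open requests)
        capacity_during_deploy.append(len(serving(pool)))
        backend.version = new_version  # stand-in for the real deploy step
        if not healthy(backend):
            # Leave the bad backend out of rotation and stop; the rest keep serving
            raise RuntimeError(f"{backend.name} failed its health check, halting rollout")
        backend.in_rotation = True
    return capacity_during_deploy


pool = [Backend("app-1", "v1"), Backend("app-2", "v1"), Backend("app-3", "v1")]
print(rolling_deploy(pool, "v2", healthy=lambda b: True))  # [2, 2, 2]
print({b.name: b.version for b in pool})  # {'app-1': 'v2', 'app-2': 'v2', 'app-3': 'v2'}
```

The return value records how many backends were serving during each step; with three backends it never drops below two, which is what keeps the deploy zero-downtime. A backend that fails its health check stays out of rotation and the rollout stops.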

Common Mistakes to Avoid

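- Adding a load balancer before you need it: unless you need redundancy, a single server is often fine until roughly 10k requests/min.

- No health checks: the load balancer keeps sending traffic to dead servers.

- Getting session stickiness wrong: if sessions live in one server's memory, they break when a user's next request goes to a different server. Pin users to one server (sticky sessions) or keep sessions in a shared store.

- Not monitoring distribution: one server receiving 80% of the traffic defeats the purpose.

- Forgetting SSL termination: handle HTTPS at the load balancer rather than on each server.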
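Health checks and distribution monitoring both fit into a few lines on top of round robin. This is a hedged sketch: `HealthCheckedRoundRobin` is a hypothetical class, `probe` is a hypothetical callable (in practice often an HTTP request to a health endpoint), and managed load balancers run such checks on a schedule for you rather than on demand.

```python
from collections import Counter
from typing import Callable, Dict, List


class HealthCheckedRoundRobin:
    """Round robin that skips backends whose most recent health check failed."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = servers
        self.healthy: Dict[str, bool] = {s: True for s in servers}
        self.sent = Counter()  # requests routed to each server, for monitoring
        self._next = 0

    def run_health_checks(self, probe: Callable[[str], bool]) -> None:
        # probe is hypothetical: return True if the server answers its health check
        for s in self.servers:
            self.healthy[s] = probe(s)

    def pick(self) -> str:
        # Try each server at most once per call, starting where the last call stopped
        for _ in range(len(self.servers)):
            s = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if self.healthy[s]:
                self.sent[s] += 1
                return s
        raise RuntimeError("no healthy backends")

    def share(self) -> Dict[str, float]:
        # Fraction of all routed requests that each server received
        total = sum(self.sent.values())
        return {s: self.sent[s] / total for s in self.servers} if total else {}


lb = HealthCheckedRoundRobin(["app-1", "app-2", "app-3"])
lb.run_health_checks(lambda s: s != "app-2")  # simulate app-2 being down
print([lb.pick() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
print(lb.share())  # {'app-1': 0.5, 'app-2': 0.0, 'app-3': 0.5}
```

`share()` reports each server's fraction of routed requests. With N healthy servers, round robin should keep each near 1/N; a server sitting at 80% is the kind of skew worth alerting on.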

Real-World Examples

- Netflix: load balancers distribute millions of requests across thousands of servers.

- Stripe: uses load balancing for API redundancy and zero-downtime deploys.

- Vercel: auto-scales Next.js apps with built-in load balancing.

- E-commerce: Black Friday traffic is handled by spinning up more servers behind a load balancer.

Category

System Design Patterns