What is Database Sharding?

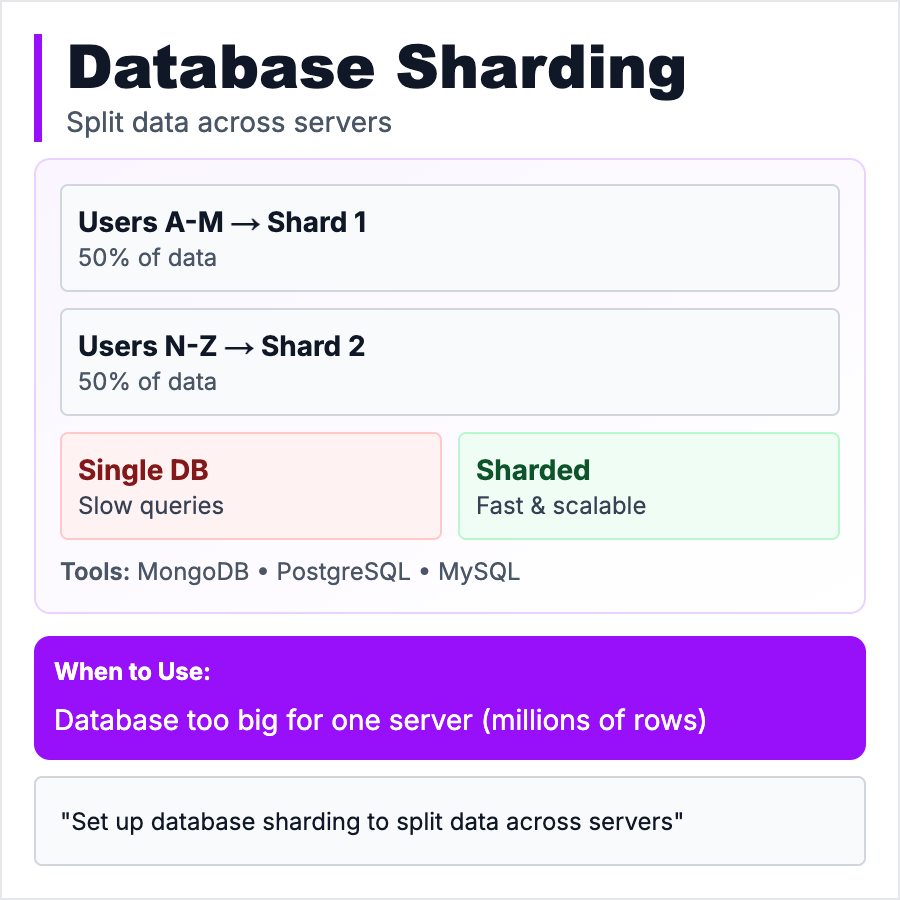

Database Sharding splits your data across multiple databases—each "shard" holds a portion of your data. Users 1-1M on Shard 1, users 1M-2M on Shard 2, etc. Unlike replication (same data copied to many servers), sharding splits different data to different servers. Allows infinite scaling but adds complexity. Most startups never need sharding—vertical scaling and read replicas handle 99% of cases.

When Should You Use This?

Only shard when you've maxed out vertical scaling (biggest database server available) and read replicas, when you have >10TB of data, or when single-database writes can't keep up. Most startups never reach this point. Before sharding: optimize queries, add indexes, use caching, scale vertically, add read replicas. Sharding is a last resort—it adds huge operational complexity.

Common Mistakes to Avoid

- •Sharding too early—99% of startups don't need it, adds massive complexity

- •Wrong shard key—poor key causes uneven distribution (one shard has 80% of data)

- •Cross-shard queries—queries across shards are slow, design to avoid them

- •No resharding plan—what happens when shards get full again?

- •Ignoring simpler solutions—read replicas + caching handles most scale problems

Real-World Examples

- •Instagram—Sharded by user ID when they hit billions of users

- •Notion—Started with single Postgres, added sharding after explosive growth

- •Discord—Shards messages by server ID, each Discord server on one shard

- •Pinterest—Sharded by board ID to scale pins across databases

Category

System Design Patterns