What is Fine-Tuning (AI)?

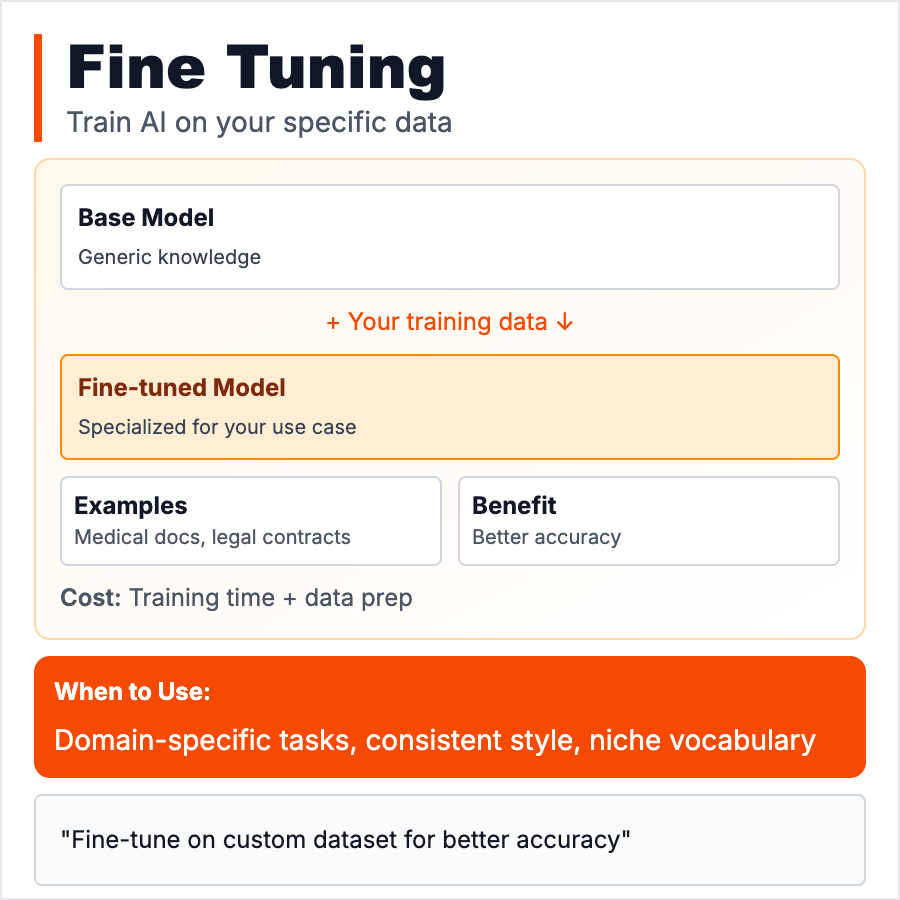

Fine-tuning means training an existing AI model on your own data to customize its behavior. You take a base model (GPT-4, Claude) and teach it your specific patterns, style, or knowledge. Unlike prompting, fine-tuning changes the model's weights, so the customization is permanent rather than restated in every prompt. It typically requires 100 to 10,000 examples and costs money and time to train. The result is a model that behaves consistently according to your needs. Use it when prompting isn't good enough and you need permanent customization.
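To make "training examples" concrete, here is a minimal sketch of how one might package examples as chat-style JSONL (one JSON object per line). The `messages` layout with `role` and `content` fields is an assumption modeled on the format several hosted fine-tuning services accept; the exact schema depends on your provider, and the helper names and sample texts are hypothetical.

```python
import json

def to_training_record(system_prompt, user_text, ideal_reply):
    """Wrap one example in a chat-style record (schema is an assumption)."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": ideal_reply},
        ]
    }

def to_jsonl(records):
    """Serialize records as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)

# Hypothetical examples; a real dataset would hold 100 or more of these.
SYSTEM = "You are a friendly support agent."
records = [
    to_training_record(SYSTEM, "How do I reset my password?",
                       "Happy to help! Open Settings, then choose Reset Password."),
    to_training_record(SYSTEM, "Where is my order?",
                       "Let me check that for you. Could you share your order number?"),
]

jsonl_text = to_jsonl(records)
print(jsonl_text)
```

Each line of the output is one complete example: the input the model sees and the reply you want it to learn.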

When Should You Use This?

Fine-tune when you need consistent behavior across thousands of calls, have 100+ high-quality training examples, find that few-shot prompting isn't accurate enough, or want to reduce prompt size (the behavior is baked into the model). Don't fine-tune when prompting works fine (it is cheaper and faster), you have fewer than 50 examples, or requirements change frequently. Fine-tuning is for production-scale, stable use cases.
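The checklist above can be sketched as a small decision helper. This is only an illustration: the function name and return strings are hypothetical, and the "borderline" band for 50 to 99 examples is an assumption, since the guidance above only covers fewer than 50 and 100 or more.

```python
def fine_tuning_advice(num_examples, prompting_good_enough, requirements_stable):
    """Return a rough recommendation based on the rules of thumb above."""
    if prompting_good_enough:
        return "keep prompting"      # cheaper and faster
    if not requirements_stable:
        return "keep prompting"      # a fine-tuned model would go stale quickly
    if num_examples < 50:
        return "collect more data"   # too few examples to fine-tune
    if num_examples < 100:
        return "borderline"          # assumption: gap between the two thresholds
    return "fine-tune"

print(fine_tuning_advice(1000, prompting_good_enough=False, requirements_stable=True))
print(fine_tuning_advice(30, prompting_good_enough=False, requirements_stable=True))
```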

Common Mistakes to Avoid

- Fine-tuning too early: try prompting first; it's easier and often good enough.

- Not enough data: you need at least 100 diverse, high-quality examples.

- Bad training data: garbage in, garbage out. Clean and validate your examples.

- Wrong use case: fine-tuning doesn't add facts (use RAG for that); it changes behavior and style.

- No evaluation: test the fine-tuned model thoroughly before production.
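Two of these mistakes, bad training data and no evaluation, can be partly guarded against before any training starts. The sketch below drops empty and duplicate examples and then holds out a slice for later testing. The `prompt`/`completion` field names, the 20% hold-out fraction, and the sample data are all assumptions for illustration.

```python
import random

def clean_examples(examples):
    """Drop examples with an empty field and exact duplicates (after trimming)."""
    seen = set()
    cleaned = []
    for ex in examples:
        prompt = (ex.get("prompt") or "").strip()
        completion = (ex.get("completion") or "").strip()
        if not prompt or not completion:
            continue  # incomplete example
        key = (prompt, completion)
        if key in seen:
            continue  # duplicate
        seen.add(key)
        cleaned.append({"prompt": prompt, "completion": completion})
    return cleaned

def split_train_eval(examples, eval_fraction=0.2, seed=0):
    """Shuffle deterministically and hold out a fraction for evaluation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_eval = max(1, int(len(shuffled) * eval_fraction))
    return shuffled[n_eval:], shuffled[:n_eval]

# Hypothetical raw data containing one duplicate and one empty prompt.
raw = [
    {"prompt": "Reset my password", "completion": "Sure, open Settings first."},
    {"prompt": "  Reset my password ", "completion": "Sure, open Settings first."},
    {"prompt": "", "completion": "Orphaned reply."},
    {"prompt": "Where is my order?", "completion": "Could you share the order number?"},
    {"prompt": "Cancel my plan", "completion": "I can help with that."},
    {"prompt": "Update billing address", "completion": "Go to Billing, then Edit."},
    {"prompt": "Do you ship abroad?", "completion": "Yes, to most countries."},
]

cleaned = clean_examples(raw)
train_set, eval_set = split_train_eval(cleaned)
print(len(cleaned), len(train_set), len(eval_set))
```

Keeping the evaluation slice out of training is what makes the later test meaningful: the model is scored on examples it has never seen.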

Real-World Examples

- Customer support: fine-tune on 1,000 support interactions for a consistent tone.

- Code generation: fine-tune on your company codebase to match your style and conventions.

- Classification: fine-tune for domain-specific classification (medical, legal).

- Content moderation: fine-tune on your moderation guidelines and examples.
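For a use case like classification, one simple way to check whether fine-tuning helped is to compare accuracy on the same held-out labels before and after. The sketch below assumes you already have each model's predictions as plain lists; the function names are hypothetical and the labels and predictions are placeholder values, not real results.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that exactly match the expected labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("need at least one labeled example")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)

def compare_models(base_preds, tuned_preds, labels):
    """Score both models on the same held-out labels."""
    base = accuracy(base_preds, labels)
    tuned = accuracy(tuned_preds, labels)
    return {"base": base, "tuned": tuned, "improved": tuned > base}

# Placeholder data for illustration only.
labels = ["legal", "medical", "legal", "medical"]
base_preds = ["legal", "legal", "legal", "medical"]
tuned_preds = ["legal", "medical", "legal", "medical"]

report = compare_models(base_preds, tuned_preds, labels)
print(report)
```

Accuracy is only one possible metric; tone or style tasks such as customer support usually need human review or a rubric instead.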
