What is Rate Limiting?

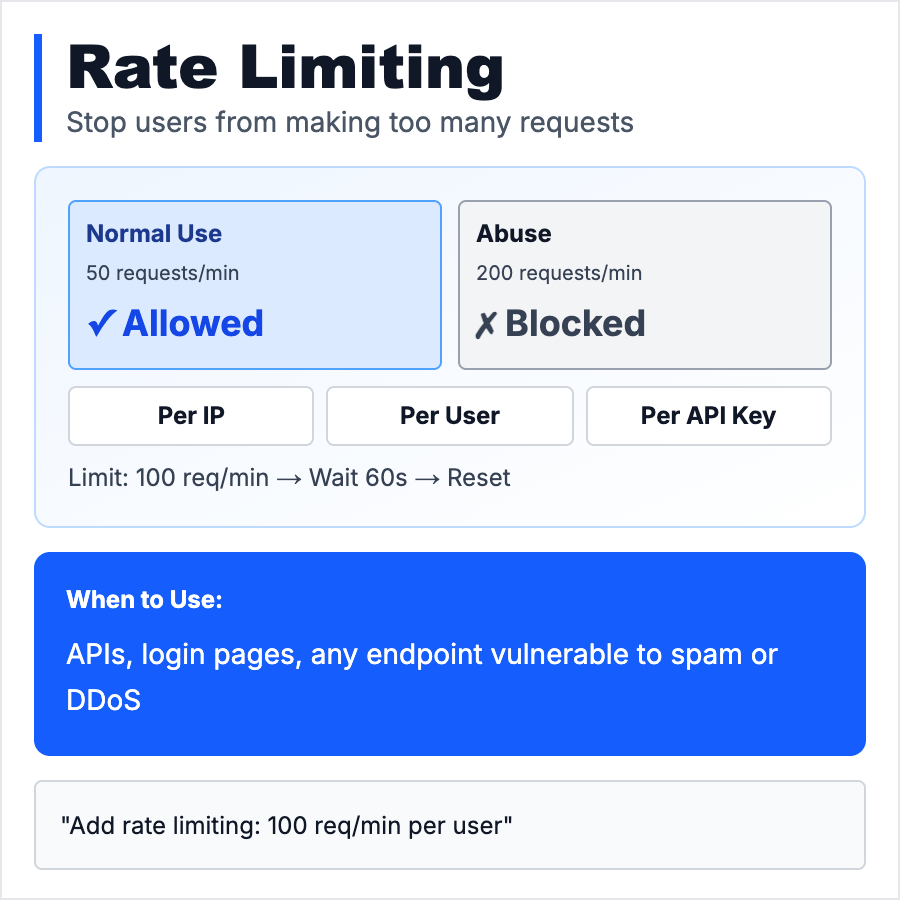

Rate limiting restricts how many requests a client can make to your API within a time window (e.g., 100 requests per minute). It prevents abuse (scraping, brute-force attacks), protects your infrastructure from overload, and ensures fair usage. Common strategies apply the limit per IP, per user, or per API key. Return HTTP 429 (Too Many Requests) when the limit is hit.
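To make the idea concrete, here is a minimal sketch of a fixed-window counter, one of several possible algorithms. The class name `FixedWindowLimiter`, its `check` method, and the choice to return a `(status, headers)` pair are assumptions made for illustration. It keeps counters in process memory, so it only suits a single-process demo.

```python
import math
import time
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class FixedWindowLimiter:
    """Allow at most `limit` requests per `window_seconds` for each key."""
    limit: int = 100
    window_seconds: float = 60.0
    # key -> (window index, requests counted in that window).
    # Entries are never evicted here; a real service would expire them.
    _counters: Dict[str, Tuple[int, int]] = field(default_factory=dict, repr=False)

    def check(self, key: str, now: Optional[float] = None) -> Tuple[int, Dict[str, str]]:
        """Return (HTTP status, response headers) for one request from `key`."""
        now = time.time() if now is None else now
        window = int(now // self.window_seconds)
        seen_window, count = self._counters.get(key, (window, 0))
        if seen_window != window:
            count = 0  # a new window has started, so the count resets
        if count >= self.limit:
            # Whole seconds until the current window ends
            retry_after = max(1, math.ceil((window + 1) * self.window_seconds - now))
            return 429, {"Retry-After": str(retry_after)}
        self._counters[key] = (window, count + 1)
        return 200, {}
```

The `key` can be whatever identifies the caller: an IP address, a user ID, an API key, or a combination of them joined into one string.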

When Should You Use This?

Add rate limiting to all public APIs, login endpoints (to prevent brute-force attacks), and expensive operations (AI inference, heavy database queries). Use tools like Upstash (serverless rate limiting), Redis, or API gateways (Cloudflare, AWS API Gateway). For login endpoints, use aggressive limits (e.g., 5 attempts per minute). For public APIs, use tiered limits (e.g., free tier: 100/hour, paid: 10k/hour).
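One way to express these policies is a small lookup table. The sketch below uses the example numbers from the paragraph above; the `/login` path prefix, the plan names `"free"` and `"paid"`, and the helper `limit_for` are hypothetical and would need to match your own routes and billing model.

```python
from typing import NamedTuple, Optional


class Limit(NamedTuple):
    max_requests: int
    window_seconds: int


# Example values taken from the text; tune them against real traffic.
LOGIN_LIMIT = Limit(5, 60)            # 5 attempts per minute
TIER_LIMITS = {
    "free": Limit(100, 3600),         # 100 requests per hour
    "paid": Limit(10_000, 3600),      # 10k requests per hour
}


def limit_for(path: str, plan: Optional[str]) -> Limit:
    """Pick the limit for a request based on its path and the caller's plan."""
    if path.startswith("/login"):
        # Login gets the strict limit regardless of plan
        return LOGIN_LIMIT
    # Unknown or missing plans fall back to the free tier
    return TIER_LIMITS.get(plan or "free", TIER_LIMITS["free"])
```

Keeping the policy in data rather than scattered through handlers makes it easier to adjust limits later without touching request logic.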

Common Mistakes to Avoid

- Rate limiting by IP only: users behind NAT share IPs; combine IP + user ID + API key

- Not handling 429 responses: clients should back off exponentially (retry after 1s, 2s, 4s...)

- Too generous limits: start strict (10/min) and loosen based on real usage data

- Not rate limiting login/signup: brute-force attacks will try thousands of passwords per second

- Forgetting about distributed systems: use centralized rate limiting (Redis, Upstash) not in-memory counters
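On the client side, the exponential backoff mentioned above could look roughly like this. It is a sketch under assumptions: `send` stands in for whatever function performs your HTTP request and returns a `(status, body)` pair, and the `sleep` parameter exists only so the waiting can be swapped out in tests.

```python
import time
from typing import Callable, Tuple


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 30.0) -> float:
    """Delay before retry number `attempt` (0-based): 1s, 2s, 4s, ... up to `cap`."""
    return min(cap, base * (2 ** attempt))


def call_with_backoff(
    send: Callable[[], Tuple[int, str]],
    max_retries: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Call `send`, retrying with growing delays while it returns 429."""
    for attempt in range(max_retries):
        status, body = send()
        if status != 429:
            return status, body
        sleep(backoff_delay(attempt))
    # Final attempt; the caller sees the 429 if the limit still applies
    return send()
```

Production clients often add random jitter to each delay so that many clients do not retry at the same instant.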

Real-World Examples

- Stripe API: 100 requests per second per API key, returns 429 with Retry-After header

- GitHub API: 5,000 requests per hour for authenticated users, 60/hour for unauthenticated

- OpenAI API: rate limits by tokens per minute (TPM) and requests per minute (RPM)

- Cloudflare: DDoS protection with automatic rate limiting for suspicious traffic
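When a server does send a Retry-After header, a client can use it instead of guessing a delay. The HTTP specification allows the value to be either a number of seconds or an HTTP date, so a sketch that handles both might look like the following. The function name `retry_after_seconds` is an assumption, and which form any particular API sends is not something this example claims to know.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_after_seconds(value: str, now: Optional[datetime] = None) -> float:
    """Convert a Retry-After header value into seconds to wait."""
    value = value.strip()
    if value.isdigit():
        # Delay-seconds form, e.g. "120"
        return float(value)
    # HTTP-date form, e.g. "Wed, 21 Oct 2015 07:28:00 GMT"
    target = parsedate_to_datetime(value)
    if target.tzinfo is None:
        # Treat a date without zone information as UTC
        target = target.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    # A date in the past means there is no need to wait
    return max(0.0, (target - now).total_seconds())
```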

Category

Cybersecurity

Tags

rate-limiting, api-security, ddos-prevention, brute-force, throttling