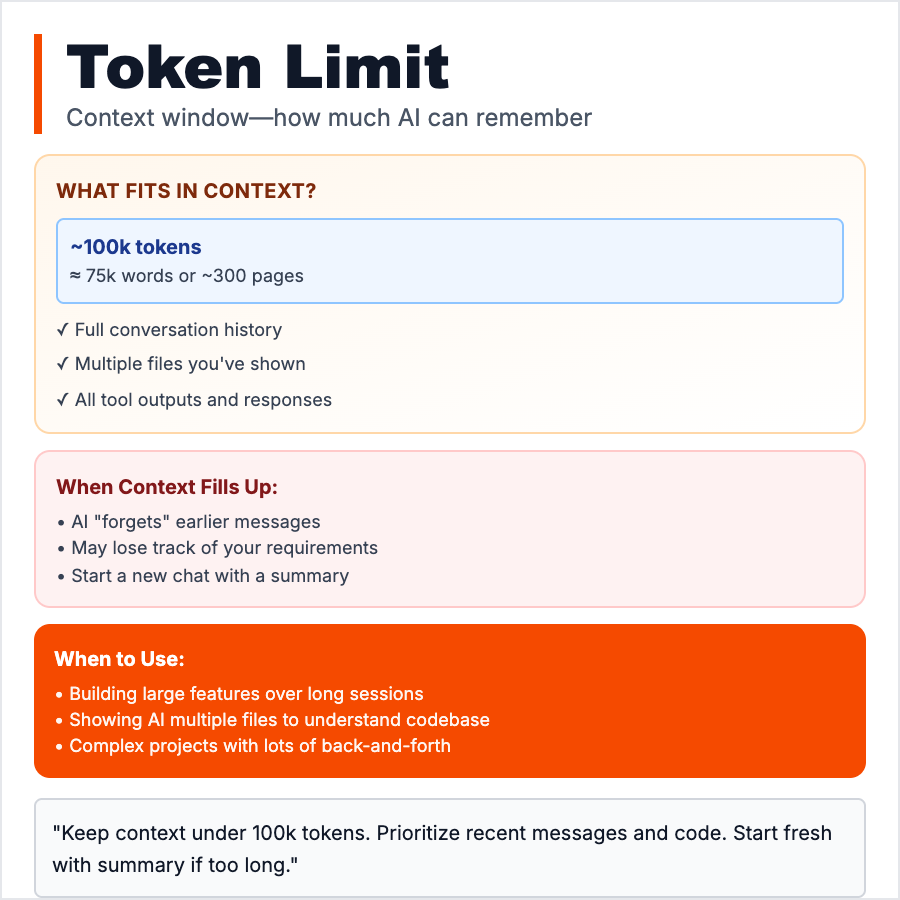

What is Token Limit?

Token Limit (aka Context Window) is the maximum amount of text an AI model can process in a single request, counting both your input and its output. Tokens are chunks of text (roughly 4 characters or 0.75 words in English). GPT-4 has an 8k to 128k token limit depending on version; Claude has up to 200k tokens. Hit the limit and the AI truncates the text, returns an error, or forgets earlier parts of the conversation.
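The rule of thumb above (about 4 characters or 0.75 words per token) can be turned into a quick estimator. This is a minimal sketch, not a real tokenizer: the function names are made up for illustration, and actual counts vary by model and language.

```python
import math

# Rough English averages quoted above; real tokenizers will differ.
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75


def estimate_tokens_by_chars(text: str) -> int:
    """Estimate token count from character length (about 4 chars per token)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_by_words(text: str) -> int:
    """Estimate token count from word count (about 0.75 words per token)."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


if __name__ == "__main__":
    sample = "Token limits cap how much text a model can read and write."
    print("by chars:", estimate_tokens_by_chars(sample))
    print("by words:", estimate_tokens_by_words(sample))
```

The two estimates will usually disagree slightly. Treat either one as a ballpark and use the model's own tokenizer when the exact number matters.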

When Should You Use This?

You don't "use" token limits—you work within them. Track token usage to avoid hitting limits, optimize prompts to fit more context, chunk large documents into smaller pieces, use summarization to compress info, or upgrade to models with larger context windows. Token limits directly affect cost (you pay per token) and what's possible (can't analyze a 500-page doc in one go on GPT-3.5).
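Chunking a large document can be sketched as follows. This is one simple approach under stated assumptions: it splits on whitespace and converts a token budget into a word budget using the 0.75 words-per-token rule of thumb. The `chunk_words` name and its parameters are hypothetical, and a production splitter would likely respect sentence or section boundaries.

```python
def chunk_words(text: str, max_tokens: int, words_per_token: float = 0.75) -> list[str]:
    """Split text into chunks that each fit an approximate token budget.

    The budget is converted to words with a rough words-per-token ratio,
    so chunk sizes are estimates, not exact token counts.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    # At least one word per chunk so the loop always makes progress.
    max_words = max(1, int(max_tokens * words_per_token))
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


if __name__ == "__main__":
    document = "word " * 10_000          # stand-in for a long document
    chunks = chunk_words(document, max_tokens=4_000)
    print(len(chunks), "chunks")
```

Each chunk can then be sent in its own request, optionally with a short summary of the earlier chunks to carry context forward.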

Common Mistakes to Avoid

- Ignoring token counts: assuming "it'll fit" until you hit errors; use token counters (tiktoken, the usage figures in OpenAI API responses)

- Pasting entire codebases: 50k lines of code usually exceeds the context window; extract the relevant snippets

- Not summarizing conversations: long chats eat tokens; periodically summarize to free up space

- Paying for wasted tokens: verbose prompts, repeated context, and fluff in responses all cost money

- Forgetting that output tokens count: if the input is 7k tokens and the limit is 8k, the AI can output at most 1k tokens
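Two of the points above lend themselves to a short sketch: working out how much room is left for output, and keeping a long conversation within budget. The helper names here are hypothetical. `trim_history` simply drops the oldest messages, which is a cruder stand-in for the summarization suggested above, and the default counter is the rough 4-characters-per-token estimate instead of a real tokenizer.

```python
from typing import Callable


def rough_count(text: str) -> int:
    """Very rough token estimate: about 4 characters per token."""
    return -(-len(text) // 4)  # ceiling division


def max_output_tokens(context_limit: int, input_tokens: int) -> int:
    """Tokens left for the model's reply once the input is counted."""
    return max(0, context_limit - input_tokens)


def trim_history(
    messages: list[str],
    budget: int,
    count_tokens: Callable[[str], int] = rough_count,
) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    # The example from the list: 7k tokens of input against an 8k limit.
    print(max_output_tokens(8_000, 7_000))
```

Swapping `rough_count` for a real tokenizer's count function would make the budget exact for a given model.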

Real-World Examples

- ChatGPT Plus: GPT-4 Turbo has a 128k token limit (~96k words), enough for a short novel

- Claude: a 200k token window lets you paste entire small codebases or long legal documents

- Notion AI: chunks large documents to stay under token limits and processes them piece by piece

- OpenAI API: GPT-4 launched at $0.03 per 1k input tokens (output tokens cost $0.06 per 1k), so 10k tokens of input costs $0.30
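The cost arithmetic in the last example can be written out as a small helper. This is a sketch under stated assumptions: the $0.03 per 1k rate comes from the example above, the function name is made up, and the separate input and output rates reflect how many providers bill, not any specific current price list.

```python
def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_1k: float,
    output_price_per_1k: float,
) -> float:
    """Estimate request cost from token counts and per-1k-token prices."""
    input_cost = input_tokens / 1000 * input_price_per_1k
    output_cost = output_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


if __name__ == "__main__":
    # 10k tokens at the $0.03 per 1k rate quoted above, with no output counted.
    cost = estimate_cost_usd(10_000, 0, input_price_per_1k=0.03, output_price_per_1k=0.03)
    print(f"${cost:.2f}")
```

Prices change often, so the rates are passed in as parameters and not hard-coded.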

Category

AI Vocabulary