What is Top-K Sampling?

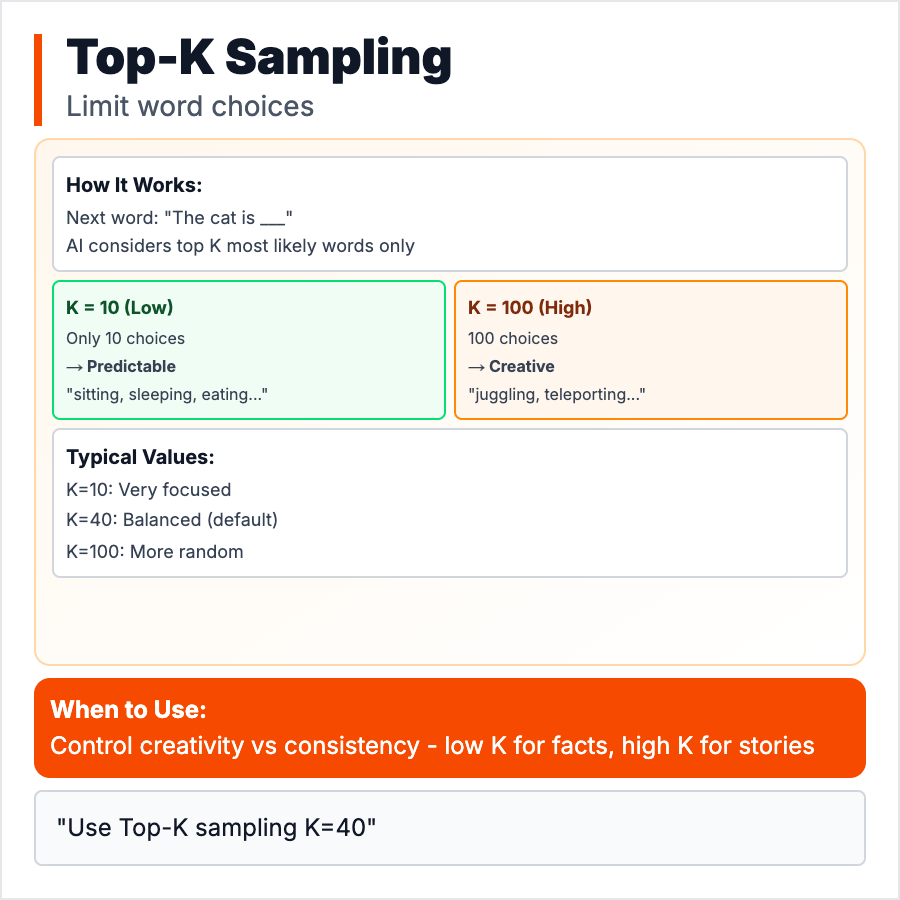

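Top-K sampling limits the model to choosing its next token (a word or word fragment) from the K most likely candidates. Everything outside that shortlist is discarded before sampling. Top-K=1 always picks the single most likely token, so the output is deterministic. Top-K=50 samples from the top 50 candidates, which adds variety. Higher K gives more creative but less focused output; lower K gives more predictable output. Top-K is often combined with Temperature. Many developers use Top-P (nucleus sampling) instead because it is more flexible: Top-K always keeps exactly K candidates whether the model is confident or unsure, while Top-P keeps however many candidates it takes to cover a chosen share of the probability. Top-K is simpler but cruder.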
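The mechanism above fits in a few lines. This is a minimal sketch, assuming we already have raw next-token scores (logits) as a dict; the tokens, scores, and the `top_k_sample` helper are hypothetical, and real providers run this step on their servers with their own details.

```python
import math
import random


def top_k_sample(logits: dict[str, float], k: int, rng: random.Random) -> str:
    """Sample one token after keeping only the k highest-scoring candidates."""
    if k < 1:
        raise ValueError("k must be at least 1")
    # Sort candidates from most to least likely and keep the first k.
    top = sorted(logits.items(), key=lambda item: item[1], reverse=True)[:k]
    # Softmax weights over the survivors only (random.choices normalizes them).
    max_logit = top[0][1]
    weights = [math.exp(score - max_logit) for _, score in top]
    tokens = [token for token, _ in top]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical next-token scores for the prompt "The sky is".
logits = {"blue": 4.0, "clear": 3.0, "dark": 2.5, "falling": 0.5, "banana": -2.0}
rng = random.Random(0)

print(top_k_sample(logits, k=1, rng=rng))  # always "blue"
print([top_k_sample(logits, k=3, rng=rng) for _ in range(5)])  # only blue, clear, or dark
```

With k=1 the function always returns the top token; with k=3 it can return any of the three best, but never "falling" or "banana".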

When Should You Use This?

Most people never adjust Top-K; Temperature or Top-P are usually the better controls. If you do use Top-K, set it low (1-10) for factual tasks and high (50-100) for creative writing. OpenAI's API exposes Top-P but not Top-K, while Anthropic's API exposes Top-K alongside Temperature and Top-P. Temperature is easier to reason about, so don't tweak Top-K unless you understand the tradeoffs and the default outputs aren't working.

Common Mistakes to Avoid

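- Adjusting without testing: changing Top-K can make outputs worse, so compare results on real prompts before and after.

- Changing Top-K and Temperature at once: both control randomness, so adjust one at a time to see which change made the difference.

- Setting it too low: Top-K=1 always picks the top token, which can make output repetitive and bland.

- Setting it too high: Top-K=1000 barely filters anything, so unlikely, off-topic tokens can slip through (especially at high Temperature).

- Ignoring Top-P: Top-P (nucleus sampling) is usually the better choice.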
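To see why Top-P is usually preferred, this minimal sketch counts how many candidates each method keeps on two made-up distributions over 10 tokens. The helper names `top_k_size` and `top_p_size` and the threshold 0.95 are illustrative assumptions, not a library API.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_size(probs: list[float], k: int) -> int:
    """Top-K keeps a fixed number of candidates, whatever the distribution looks like."""
    return min(k, len(probs))


def top_p_size(probs: list[float], p: float) -> int:
    """Top-P keeps the smallest set of most likely candidates whose total probability reaches p."""
    cumulative = 0.0
    for count, prob in enumerate(sorted(probs, reverse=True), start=1):
        cumulative += prob
        if cumulative >= p:
            return count
    return len(probs)


# Two hypothetical distributions over 10 candidate tokens.
confident = softmax([10.0] + [0.0] * 9)  # one token dominates
uncertain = softmax([1.0] * 10)          # all tokens equally likely

for name, probs in [("confident", confident), ("uncertain", uncertain)]:
    print(name, "top-k=5 keeps", top_k_size(probs, 5), "| top-p=0.95 keeps", top_p_size(probs, 0.95))
```

Top-K keeps 5 candidates in both cases, so it retains four near-zero tokens when the model is confident and cuts half of the equally plausible tokens when it is unsure. Top-P keeps 1 candidate for the confident distribution and all 10 for the uncertain one.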

Real-World Examples

- Factual: Top-K=1, Temperature=0 → greedy decoding, essentially the same output every time

- Balanced: Top-K=40, Temperature=0.7 → creative but coherent

- Creative: Top-K=100, Temperature=1.0 → very varied, may drift into nonsense

- Default: most APIs rely on Top-P instead, so you can usually leave Top-K unset
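The factual, balanced, and creative presets in the list above can be sketched as one sampling function that applies Temperature and Top-K together. This is a simplified illustration, assuming logits are available as a dict and treating Temperature=0 as greedy decoding; `sample_next` and `PRESETS` are hypothetical names, and real providers may order or implement these steps differently.

```python
import math
import random

# The three presets from the list, as (top_k, temperature) pairs.
PRESETS = {
    "factual": (1, 0.0),
    "balanced": (40, 0.7),
    "creative": (100, 1.0),
}


def sample_next(logits: dict[str, float], top_k: int, temperature: float, rng: random.Random) -> str:
    """Pick the next token using Temperature and Top-K together (a simplified sketch)."""
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    ranked = sorted(logits.items(), key=lambda item: item[1], reverse=True)
    # Temperature 0 is treated here as greedy decoding: take the single most likely token.
    if temperature == 0:
        return ranked[0][0]
    # Dividing by a positive temperature rescales scores but never changes their order,
    # so the Top-K cut selects the same tokens before or after scaling.
    survivors = ranked[:top_k]
    scaled = [score / temperature for _, score in survivors]
    max_scaled = max(scaled)
    weights = [math.exp(s - max_scaled) for s in scaled]
    return rng.choices([token for token, _ in survivors], weights=weights, k=1)[0]


# Hypothetical next-token scores; real vocabularies have tens of thousands of tokens.
logits = {"blue": 4.0, "clear": 3.0, "dark": 2.5, "falling": 0.5, "banana": -2.0}
rng = random.Random(42)
for name, (top_k, temperature) in PRESETS.items():
    samples = [sample_next(logits, top_k, temperature, rng) for _ in range(5)]
    print(name, samples)
```

The factual preset always prints "blue". On this five-token toy vocabulary, K=40 and K=100 keep every token, so only Temperature separates the balanced and creative presets here; on a real vocabulary the Top-K cut would remove far more candidates.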

Category

AI Vocabulary

Tags

top-k, sampling, ai-parameters, creativity-control, llm-tuning