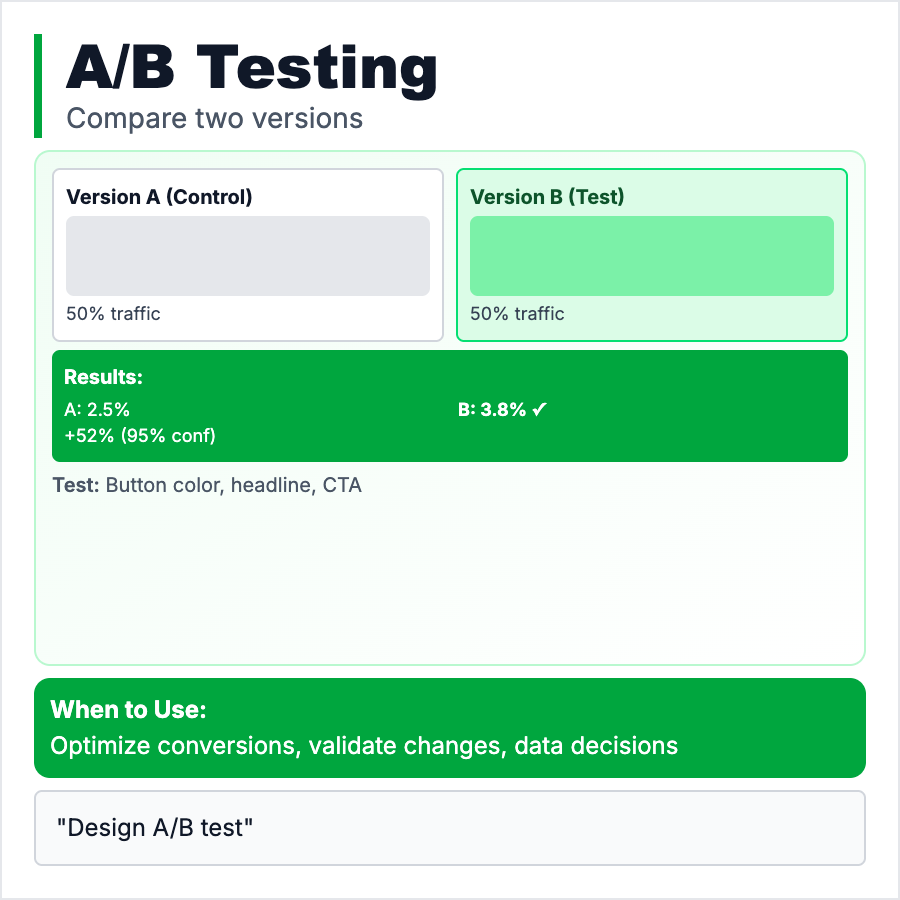

What is A/B Testing?

A/B testing (also called split testing) randomly splits users between two versions of an experience, a control (A) and a variant (B), and measures which one performs better on a chosen metric. For example, 50% of users see a blue button and 50% see a green one, and you measure which converts better. Once the difference is statistically significant, ship the winner. A/B testing takes opinion out of product decisions and lets the data decide. It is essential for optimization but risky for early-stage products, because you need substantial traffic before the results mean anything.
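A minimal sketch of the two mechanics described above: splitting users 50/50 and checking whether the difference in conversion rate is statistically significant. The function names, the SHA-256 hash bucketing, and the choice of a pooled two-proportion z-test are illustrative assumptions, not a prescribed method.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str) -> str:
    """Deterministically bucket a user into "A" or "B" with a 50/50 split.

    Hashing (experiment, user_id) keeps each user in the same variant on every
    visit while spreading users evenly across the two arms.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Every user (or no user) converted in both arms: no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value, 2 * (1 - Phi(|z|)), written via the complementary error function.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Blue button (A): 200 of 5,000 users converted; green button (B): 250 of 5,000.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With 4% vs. 5% conversion on 5,000 users per arm, z comes out around 2.4 and the two-sided p-value around 0.016, below the conventional 0.05 threshold. Hashing rather than calling a random number generator on each visit means a returning user never flips between variants.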

When Should You Use This?

Run A/B tests when you have enough traffic (a common rule of thumb is at least 100 conversions per variant, though the real requirement depends on your baseline conversion rate and the size of the effect you want to detect), when you are optimizing existing flows (signup, onboarding, pricing pages), or when you have competing ideas to settle. Don't A/B test before product-market fit; at that stage, qualitative feedback matters more. Don't test 10 things at once; isolate variables. Use A/B tests for incremental wins, not radical product changes.
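How much traffic counts as "enough" can be estimated before a test starts. The sketch below uses the standard normal-approximation sample-size formula for a two-sided comparison of two conversion rates; the function name and the defaults (alpha = 0.05, 80% power) are illustrative assumptions.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in EACH arm to detect an absolute lift of `mde` over `baseline`.

    Normal-approximation formula for a two-sided two-proportion z-test.
    """
    p1, p2 = baseline, baseline + mde
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / mde ** 2)


# 4% baseline conversion, aiming to detect a lift to 5%.
print(sample_size_per_variant(0.04, 0.01))
```

With a 4% baseline and a one-point absolute lift, this works out to roughly 6,700 users per variant, or about 270 conversions per variant at the baseline rate. Halving the detectable effect roughly quadruples the required sample, which is why small expected wins demand far more traffic than the rule of thumb suggests.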

Common Mistakes to Avoid

- Not enough traffic: A/B tests need statistical significance, which often takes thousands of users.

- Stopping too early: decide the sample size up front and run the test to completion; don't call a winner after one day or the moment the result first looks significant.

- Testing too many things: change one variable at a time, or you won't know what caused the difference.

- Ignoring long-term effects: a green button might convert better but bring in users who churn more.

- Local maxima: A/B testing finds small wins; it doesn't invent breakthrough features.
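The "stopping too early" mistake can be made concrete with a small simulation. This sketch runs A/A tests, where both arms are identical, so every declared winner is a false positive. It compares testing once at a fixed sample size with checking after every batch of users and stopping at the first p < 0.05. The parameters (10% conversion rate, 100 users per arm per batch, 10 batches, 500 simulated tests) are arbitrary assumptions chosen for illustration.

```python
import math
import random


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rates(sims: int = 500, looks: int = 10, users_per_look: int = 100,
                         rate: float = 0.10, alpha: float = 0.05,
                         seed: int = 0) -> tuple[float, float]:
    """Run A/A tests and return (fixed_rate, peeking_rate) of false "winners".

    fixed:   a single test after all `looks` batches of users have arrived.
    peeking: a test after every batch; a winner is declared at the first p < alpha.
    """
    rng = random.Random(seed)
    fixed_hits = peeking_hits = 0
    for _ in range(sims):
        conv_a = conv_b = n = 0
        p_values = []
        for _ in range(looks):
            # Both arms share the same true conversion rate.
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            p_values.append(z_test_p_value(conv_a, n, conv_b, n))
        fixed_hits += p_values[-1] < alpha
        peeking_hits += any(p < alpha for p in p_values)
    return fixed_hits / sims, peeking_hits / sims


fixed, peeking = false_positive_rates()
print(f"fixed horizon: {fixed:.1%}   peeking after every batch: {peeking:.1%}")
```

Because the peeking rule also checks the final batch, it can never flag fewer false winners than the fixed rule, and in practice it flags substantially more than the nominal 5%. Fixing the sample size in advance, or using a sequential testing method designed for repeated looks, avoids this inflation.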

Real-World Examples

- Booking.com: runs hundreds of A/B tests simultaneously to optimize conversion.

- Netflix: tests thumbnail images and has found that the right image can increase watch rate by 30% or more.

- Obama campaign: A/B tested email subject lines and raised millions of dollars more.

- Superhuman: tests onboarding flows to improve activation rate.

Category

Product Management